We’re in the middle of an AI summer: hundreds of new AI papers are published every week, while some folks in the media and Silicon Valley breathlessly proclaim that Large Language Models (LLMs) have practically reached “human-level intelligence”, with AGI lurking just around the corner. But there’s an elephant in the room: scientists across the disciplines concerned with the nature of intelligence agree that we don’t really know what we mean by “intelligence”, never mind “Artificial General Intelligence” (AGI).

Despite this conceptual uncertainty, OpenAI proposes the following definition of AGI on their website:

…artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work.

(Notice this is a single sentence where the central word is “outperform”).

However, this single-sentence definition raises a fundamental concern. Reducing intelligence to performance measures on specific tasks is scientifically misleading, philosophically shallow and ultimately a disservice to a society that must improve AI and algorithmic literacies. Intelligence is not about outperforming humans at predefined goals; it’s about finding novel ways of thinking, adapting to unexpected challenges, and generating ideas that may have no immediate utility. Focusing on whether machines outperform humans puts forward a narrow, competitive view of AI development. Actual progress lies not in surpassing human abilities but in augmenting them in ways that open up new possibilities for understanding and action. Indeed, this definition risks exacerbating the current AI hype by presenting intelligence as a measurable commodity rather than a profound and multifaceted phenomenon, perpetuating misconceptions about what AI can and cannot do.

Why challenge OpenAI’s definition of AGI?

After all, perhaps today’s rapid technological advances call for a pragmatic, measurable, and unambiguous conceptualization, one that might also make it easier to deliver this kind of AGI to society. Well, not so fast.

A performance-driven conception of intelligence that merely tracks whether machines can surpass humans in specific tasks encourages a “black box” mindset. In this mindset, algorithmic opacity makes the underlying mechanisms behind an AI system’s behaviour secondary or irrelevant. As long as the system meets or exceeds benchmarks, the nature of its internal processes is overlooked.

If the underlying mechanisms were transparent and interpretable—rather than hidden in a black box—we could openly discuss the limitations of current AI systems, even those that outperform humans in certain domains. In doing so, much of the AGI hype would likely dissipate. We’d be able, for instance, to examine precisely which representations an AI model employs and judge whether they are suitable for the type of reasoning task it aims to solve.

The performance approach to AGI is dangerous for several reasons. In this post I outline arguments on algorithmic opacity (black box) and the need for representational (abstraction) diversity, which I will elaborate on in future posts.

1. Algorithmic opacity.

Developers, stakeholders, and society at large may sideline transparency, interpretability, and accountability when performance is the goal.

In black-box AI systems, we often cannot explain why a model produces a specific output. This opacity makes it difficult to identify errors, biases, or malicious behaviours, leading to potential harm or misuse without clear pathways for mitigation.

When confronted with black-box systems, people naturally attempt to make sense of what they cannot see by creating narratives or attributing unseen motives to the system’s behaviour. Without transparent, mechanistic explanations, we fill the gap with our own reasoning—often borrowing from cultural scripts about fate, luck, or otherworldly intervention. This can lead to anthropomorphizing algorithms or interpreting their recommendations as divinely inspired “messages,” rather than products of statistical processes.

Indeed, such opacity is fuelling the emergence of supernatural beliefs about the AI personalization algorithms used by TikTok (and other social media platforms), and influencers are exploiting it. See, for example, Kelley Cotter’s work on “algorithmic conspirituality”.

One might assume that reducing algorithmic opacity undercuts the raw performance prized by OpenAI’s AGI definition. Yet, in “Why Are We Using Black Box Models in AI When We Don’t Need To? A Lesson From an Explainable AI Competition”, Cynthia Rudin and Joanna Radin argue against the assumption that opacity is a prerequisite for high performance. They point out that the reliance on black box models is often unjustified and that interpretable models can achieve similar accuracy without sacrificing transparency.

In short, when performance alone reigns supreme, we risk normalizing opaque models that fuel misconceptions, hamper accountability, and even spawn quasi-religious narratives. There is compelling evidence that we can prioritize more transparent, interpretable approaches without sacrificing performance. Doing so would curb the dangers outlined above and lay a solid foundation for tackling the deeper challenge of how AI represents and reasons about the world, a challenge I explore next through the lens of abstraction diversity.

2. Lack of grounded understanding.

Human intelligence involves domain-specific, contextual reasoning (in geometry, music, or language, for example) and the formation of abstract concepts that can be generalized and applied to other domains, e.g. from geometry to chemistry. These qualities allow humans to adapt flexibly to new problems. While current LLMs achieve impressive performance by recognizing statistical patterns across vast datasets, they cannot form diverse, robust abstractions for different domains. Instead of reasoning with different abstractions, as humans do, they rely on a single, general pattern-matching approach over vector spaces. Thus, overemphasizing performance on benchmarks hides this crucial limitation and can create the illusion of deeper intelligence where there is only statistical pattern matching paired with rule-based systems.

Domain specificity, abstract concepts, conceptual spaces…

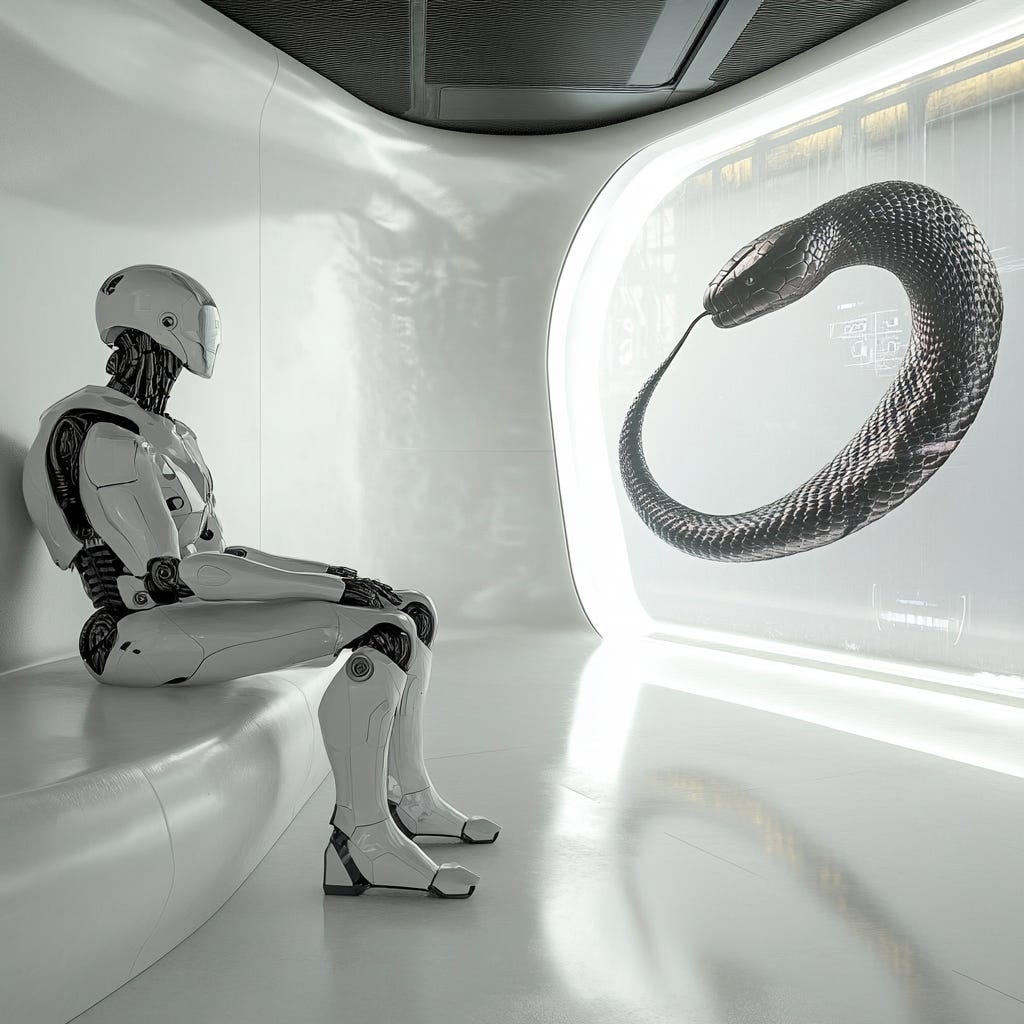

In one of her books, “The Creative Mind: Myths and Mechanisms”, Margaret Boden recalls a problem that mid-19th-century chemists struggled with: understanding how six carbon atoms in benzene could be arranged in a stable molecule. Friedrich August Kekulé famously had a dream of a snake biting its own tail, inspiring him to propose a ring structure instead of a straight-chain molecule. Boden uses this story to make a critical point about humans’ creative problem-solving mechanisms and their connection with our ability to work with abstract representations.

The early organic chemists worked with a conceptual space, the foundation of which can be conceived as a Lego game of sorts. The idea was to connect atoms (Lego pieces with variable numbers of plugs) into stable molecules with no plug left disconnected. As in language, that particular Lego game admitted only linear structures, similar to words and sentences. The working model was that molecules had a starting atom on the left and an ending atom on the right of a chain structure.

As expected, when nineteenth-century organic chemists tried to model benzene (C₆H₆), they initially treated atoms like Lego pieces that could be arranged only in linear chains. However, the known chemical properties of benzene stubbornly resisted every linear arrangement. The conceptual space offered no way to make useful inferences about the molecule, and chemists attributed the missing solution either to an incomplete exploration of the possible linear structures or to a misinterpretation of benzene’s known properties. Neither hypothesis was correct. Friedrich August Kekulé realized this and sparked the breakthrough that solved the problem by envisioning a ring structure.

Kekulé reportedly dreamed of a snake biting its own tail, forming a circle. This unusual, almost playful metaphor inspired him to reimagine carbon atoms linking end to end in a ring. Such metaphorical thinking—akin to play—allowed him to break free from the rigid, linear “Lego” mindset, the conceptual space driving the reasoning in organic chemistry at the time. In Margaret Boden’s terms, Kekulé transformed that conceptual space by introducing a new structural possibility into the abstraction system.

Before Kekulé, chemists were effectively stuck with only linear molecule representations. The notion of a closed ring was novel and counterintuitive—precisely what you’d expect from a genuine conceptual shift. This example illustrates how cognitive flexibility—the ability to step outside familiar frameworks (and abstractions)—can solve “impossible” problems precisely because it allows us to invent new abstractions and inference rules that apply to them.
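To make the idea of transforming a conceptual space a little more concrete, here is a minimal Python sketch of the Lego game described above. Everything in it is an illustrative assumption rather than anything taken from Boden: the `VALENCE` table, the function names, and the simplification that the old space is "any ring-free structure with no plug left over". It checks plug counts only and does not model the chemical properties that ruled out the linear candidates for benzene.

```python
# Hypothetical "Lego" valences: how many plugs each atom type has.
VALENCE = {"C": 4, "H": 1}


def build_graph(atoms, bonds):
    """Return an adjacency map: atom name -> list of (neighbour, bond order)."""
    graph = {name: [] for name in atoms}
    for a, b, order in bonds:
        graph[a].append((b, order))
        graph[b].append((a, order))
    return graph


def plugs_all_used(atoms, graph):
    """Every atom must use exactly as many plugs as its valence allows."""
    return all(
        sum(order for _, order in graph[name]) == VALENCE[element]
        for name, element in atoms.items()
    )


def has_cycle(graph):
    """Detect a ring with an iterative depth-first search."""
    seen = set()
    for start in graph:
        if start in seen:
            continue
        stack = [(start, None)]
        while stack:
            node, parent = stack.pop()
            if node in seen:
                return True  # reached the same atom by a second route
            seen.add(node)
            for neighbour, _ in graph[node]:
                if neighbour != parent:
                    stack.append((neighbour, node))
    return False


def chain_only_space(atoms, graph):
    """Old conceptual space (assumed): no spare plugs and no rings."""
    return plugs_all_used(atoms, graph) and not has_cycle(graph)


def transformed_space(atoms, graph):
    """Transformed space (assumed): ring closure becomes a legal move."""
    return plugs_all_used(atoms, graph)


def ethane():
    atoms = {"C0": "C", "C1": "C"}
    bonds = [("C0", "C1", 1)]
    for i in range(6):
        atoms[f"H{i}"] = "H"
        bonds.append((f"C{i // 3}", f"H{i}", 1))
    return atoms, build_graph(atoms, bonds)


def benzene():
    atoms, bonds = {}, []
    for i in range(6):
        atoms[f"C{i}"] = "C"
        atoms[f"H{i}"] = "H"
        bonds.append((f"C{i}", f"H{i}", 1))
        # Alternate double and single bonds around the ring.
        bonds.append((f"C{i}", f"C{(i + 1) % 6}", 2 if i % 2 == 0 else 1))
    return atoms, build_graph(atoms, bonds)


print(chain_only_space(*ethane()))    # True
print(chain_only_space(*benzene()))   # False
print(transformed_space(*benzene()))  # True
```

The point of the sketch is that no amount of searching inside `chain_only_space` will ever accept the ring. The structure only becomes expressible once the rules of the space themselves are changed.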

The ideas about the role of different forms of abstraction and inferential rules were also part of AI development. Indeed, during one of the AI winters back in 1993, Davis, Shrobe, & Szolovits wrote a paper that was one of the reasons why I pursued a doctoral degree in AI at Edinburgh University.

The paper is entitled “What is a knowledge representation?”

Their ideas about knowledge representation in AI systems are compatible with and extend Boden’s conceptual spaces. In Davis et al.’s view, a knowledge representation in AI plays five roles:

A surrogate: a stand-in for the real world, allowing the system to reason about what’s “out there” without direct physical interaction.

A set of ontological commitments: KR imposes an organization on what the system deems relevant about the world the AI agent interacts with.

A fragmentary theory of intelligent reasoning: the representation encodes how an AI agent can draw inferences, form hypotheses, and so on.

A medium for pragmatic computation: it provides the structures that let an AI agent do computations efficiently.

A medium of expression: KR should be inspectable and explainable to humans.

Crucially, Davis et al. stress that abstraction diversity—the capacity to use multiple representational strategies (e.g. rules, semantic networks, frames, conceptual graphs)—is indispensable in AI. Different tasks (diagnosis, planning, analogy-making, creative design, etc.) require different knowledge representation modes.
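As a rough illustration of what using more than one representational strategy can look like, the Python sketch below encodes toy knowledge in two of the formalisms mentioned above: production rules and a small semantic network. The facts, rule contents, and function names are all hypothetical and chosen only for illustration; they are not drawn from Davis et al. and are not medical or chemical knowledge to rely on.

```python
# Representation 1: production rules (if all premises hold, add the conclusion).
RULES = [
    ({"fever", "cough"}, "flu_suspected"),
    ({"flu_suspected", "short_of_breath"}, "refer_to_doctor"),
]


def forward_chain(facts, rules):
    """Apply rules until nothing new is derived; return facts and a trace."""
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                trace.append((sorted(premises), conclusion))
                changed = True
    return known, trace


# Representation 2: a semantic network with "is_a" links and local properties.
IS_A = {
    "benzene": "aromatic_compound",
    "aromatic_compound": "organic_compound",
}
PROPERTIES = {
    "organic_compound": {"contains_carbon": True},
    "aromatic_compound": {"has_ring": True},
}


def lookup(concept, prop):
    """Walk up is_a links until the property is found; return value and path."""
    path = []
    node = concept
    while node is not None:
        path.append(node)
        if prop in PROPERTIES.get(node, {}):
            return PROPERTIES[node][prop], path
        node = IS_A.get(node)
    return None, path


known, trace = forward_chain({"fever", "cough", "short_of_breath"}, RULES)
for premises, conclusion in trace:
    print(premises, "=>", conclusion)

value, path = lookup("benzene", "contains_carbon")
print(value, "via", " -> ".join(path))
```

Both representations return their answer together with the steps that produced it (the fired rules, the inheritance path), which is one way to read the "medium of expression" role: a human can inspect and revise the ontology and the inference steps directly.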

Contemporary large language models (such as GPT-3 and GPT-4) rely on a single massive statistical embedding space, usually learned through transformer architectures. While these models capture correlations across tokens in massive text corpora, they do not:

Adopt multiple representational formalisms: LLMs do not internally switch between, say, logical proposition structures, object-centric frames, or probabilistic graphs.

Commit to explicit ontologies that can be inspected and revised modularly.

Provide fully transparent theories of how they reach inferences, making it difficult to analyse or adapt their “reasoning.”

This means that LLM “knowledge” is effectively stored and deployed through vast parameter matrices, which are opaque even to their creators. Although LLMs may score highly on many performance benchmarks, they do not exhibit the sort of representational flexibility that Davis et al. view as fundamental to intelligence.

Boden’s notion of conceptual space (or maps of the mind) defines creativity as the exploration and transformation of the generative systems we obtain from a set of ontological commitments realised in an abstraction (Lego, maths, music, organic chemistry and so on).

True (general) intelligence, in her view, involves:

Diverse abstractions: the ability to shift conceptual frameworks, or create entirely new ones.

Inspection and reflection: understanding (at some level) one’s own representations so they can be reworked.

Genuine novelty: systematically altering rules and categories to discover or invent unforeseen ideas.

While LLMs can mimic creative output by sampling from patterns in training data, they do not restructure their own conceptual spaces at a deep level because they do not have a diversity of representational abstractions. They apply essentially the same statistical method—predicting next tokens—across any domain.
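The "same method across any domain" point can be shown with a deliberately tiny stand-in. The Python sketch below is a bigram counter, which is vastly simpler than a transformer and is not how any real LLM is implemented; the function names and the toy sequences are assumptions made purely for illustration. What it shares with the description above is that one next-token procedure is reused unchanged, whatever the tokens happen to mean.

```python
from collections import Counter, defaultdict


def train_bigrams(tokens):
    """Count, for each token, which tokens follow it in the sequence."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    if token not in counts:
        return None
    return counts[token].most_common(1)[0][0]


# Two unrelated "domains", handled by exactly the same code path.
sentence = "the cat sat on the mat the cat ran".split()
melody = "C E G C E G C E A".split()

language_model = train_bigrams(sentence)
music_model = train_bigrams(melody)

print(predict_next(language_model, "the"))  # cat
print(predict_next(music_model, "E"))       # G
```

Nothing in the code knows that one sequence is language and the other is music: there is no grammar, no scale, no domain-specific abstraction, only co-occurrence counts over symbols.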

In short, conflating “outperforming humans” with “general intelligence” glosses over what makes minds—human or artificial—truly remarkable: the capacity to develop, revise, and even invent new representations that reframe how we see problems. As Margaret Boden reminds us, creativity arises when conceptual spaces are transformed, not just exploited. Meanwhile, Davis, Shrobe, and Szolovits stress that flexible, transparent knowledge representations are vital for real-world AI. With LLMs, we see impressive performance, but we also see opaque mechanisms, single-format embeddings, and limited conceptual diversity. If we aspire to a truly general intelligence, it’s not enough to chase benchmarks; we must cultivate systems that understand, explain, and create in ways that reflect the profound complexity of intelligence itself.